AVOD with Unified Packager

Learn how to process VOD content for frame-accurate ad insertion, using the Unified Packager and Remix

This page explains how to process VOD assets by transcoding them with FFmpeg and packaging them into streams with the Unified Streaming solution.

The example will generate segmented outputs for both HLS (with TS segments) and DASH (with fMP4 segments). A segmented output is required to allow the server-side manifest manipulator to insert ad segments in the relevant places.

To let broadpeak.io insert ads frame-accurately at the relevant cuepoints, with smooth transitions between the content and the ads and no glitches or awkward jumps, the content must be properly conditioned. We will assume that the timing of appropriate cuepoints for the asset comes from an external source, such as a CMS. All elements in the chain (FFmpeg, Unified Streaming and broadpeak.io) need access to that timing information.

Demo Asset

This example uses the standard open-source Tears of Steel 12-minute asset from Blender Studio. With manual work, the author determined that appropriate cuepoints for mid-rolls that avoid cutting in the middle of scenes are 01:09.920, 04:17.920 and 09:48.400.

High-level Workflow

The following steps will be required to define the full service:

1. Transcoding with FFmpeg

The source asset is transcoded into an audio and video ladder, with independent renditions. In video renditions, IDR frames are inserted at the cue points, which will allow the packager to splice the stream at those exact points.

2. Packaging with Unified Packager and Remix

We use the Unified Packager to split the media streams (audio, video, subtitles) into individual TS and fMP4 segments, and generate HLS and DASH manifests.

In order to ensure that the cuepoints are taken into consideration to enable frame-accurate ad insertion, we also use Unified Remix to provide Packager with timing information.

The output is stored on the origin.

3. broadpeak.io configuration

An Asset Catalog source is created in broadpeak.io to point to that origin.

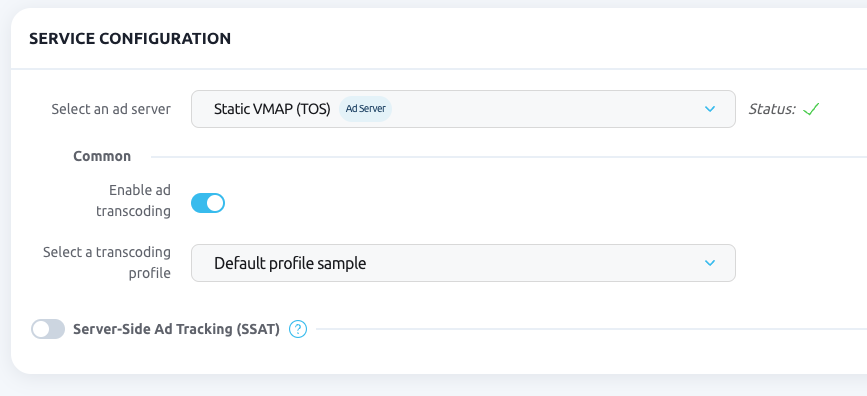

To help with testing, we create a static VMAP file with an ad schedule, which is used in the broadpeak.io solution as an Ad Server.

Finally, a Dynamic Ad Insertion AVOD service is created, which connects the Asset Catalog to the Ad Server.

4. Delivery

For optimized delivery, a CDN distribution should be used to only route manifest requests from your player to broadpeak.io.

But as a starting point, you can test your stream in the broadpeak.io Web App.

Pre-requisites

- Naturally, you need source files for at least one VOD asset, and information about timing for ad opportunity placement.

- You need a broadpeak.io account.

- You need a Transcoding Profile in your account that matches the encoding ladder that you configure in FFmpeg. Ask your broadpeak.io representative for help if you don't have a suitable one ready.

Default Profile

The example below uses a profile that is compatible with the default samples that you can add to your account within the Web app (by clicking the Create Samples button in the Sources view), or via the API.

- You also need to have a license key that allows you to use the Unified products. That key must give you rights of use of at least the following features:

- Packaging: HLS, DASH and CMAF

- Remixing: VOD

- Metadata: Timed Metadata

You can obtain a trial key that scopes those features on the Unified Streaming website.

- You need a storage solution to act as the Origin, into which you will upload the outputs of the packager. A static origin is sufficient, such as an AWS S3 or Google Cloud Storage bucket. It needs to allow public read access.

- A local installation of FFmpeg. Instructions can be found online.

- The easiest way to use Unified products is through Docker. You therefore need a working version of Docker on your machine.

Step by Step

0. Setup

Before you can use any of the Unified tools, you will need to define an environment variable, which will be passed to the Unified Docker container:

export UspLicenseKey=ZmFicmUubGFtYmVhdU...

You may also want to download the Unified images, but if you don’t, it will get done automatically the first time you try to use one:

docker pull unifiedstreaming/mp4split:latest

docker pull unifiedstreaming/unified_remix:latest

1. Transcode

1.a. Video renditions

The first step is to transcode the source video into multiple video-only renditions, at different bitrates and resolutions, as is standard practice when creating ABR streams.

ffmpeg -y -i source/TOS-original-24fps-1080p.mp4 \

-an \

-c:v libx264 -profile:v high -level:v 4.0 -b:v 5000000 -vf scale=-2:1080 \

-r 25 \

-g 48 -sc_threshold 0 \

-force_key_frames 69.92,257.92,588.4 \

output/TOS_h264_1080p_5000k.mp4

Let’s clarify what that command instructs FFmpeg to do:

- `-c:v libx264 -profile:v high -level:v 4.0 -b:v 5000000 -vf scale=-2:1080` provides the main specs for the output: H.264 with the “high” profile and level 4.0, with a target bitrate of 5 Mbps, and scaled to 1080p (in a way that retains the source aspect ratio). Standard stuff, really.

- `-r 25` forces the output frame rate to be 25 fps.

- `-g 48` defines the GOP size, to be 48 frames. FFmpeg will insert IDR frames at the start of each GOP.

- `-sc_threshold 0` prevents FFmpeg from detecting scene changes and inserting other I-frames.

- `-force_key_frames 69.92,257.92,588.4` tells FFmpeg to insert other IDR frames at specific time points. Those are our future splice points.

- `-an` tells it to ignore the audio tracks. They will be treated individually.

Why not use 2-second GOPs?

To guarantee that audio and video segments are perfectly aligned, which is usually recommended but becomes particularly critical when the stream will be modified to insert ads, we need to choose a segment duration that is compatible with both the video frame rate and the audio sample rate. At 25 fps and 48 kHz AAC, the closest compatible value below 4 seconds is 3.84s (which is 96 video frames). Because I would like 2 GOPs per segment (a personal choice), the GOP will therefore be 48 frames or 1.92s. Check this handy calculator if you use other specs for your media.
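The arithmetic behind that choice can be sketched as follows. This is only an illustration: it assumes AAC-LC with 1024 samples per audio frame (a value not stated above), and the helper names are hypothetical. It needs Python 3.9 or later for `math.lcm`.

```python
from fractions import Fraction
from math import lcm

FPS = 25
SAMPLE_RATE = 48000
AAC_FRAME_SAMPLES = 1024  # assumption: AAC-LC, 1024 samples per audio frame

video_frame = Fraction(1, FPS)                        # 40 ms
audio_frame = Fraction(AAC_FRAME_SAMPLES, SAMPLE_RATE)  # about 21.33 ms


def smallest_common_duration(a: Fraction, b: Fraction) -> Fraction:
    """Smallest duration that is a whole number of both a and b."""
    numerator = lcm(a.numerator * b.denominator, b.numerator * a.denominator)
    return Fraction(numerator, a.denominator * b.denominator)


unit = smallest_common_duration(video_frame, audio_frame)


def is_aligned(duration: Fraction) -> bool:
    """True if the duration holds whole video frames and whole audio frames."""
    return (duration / unit).denominator == 1


print("alignment unit:", float(unit), "s")
print("3.84 s segment aligned:", is_aligned(Fraction(96, FPS)))
print("1.92 s GOP aligned:", is_aligned(Fraction(48, FPS)))
print("2 s GOP aligned:", is_aligned(Fraction(2)))
```

Under these assumptions, any duration that is a multiple of the alignment unit keeps audio and video segment boundaries in step, which is why the GOP and segment durations are expressed as frame counts rather than round numbers of seconds.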

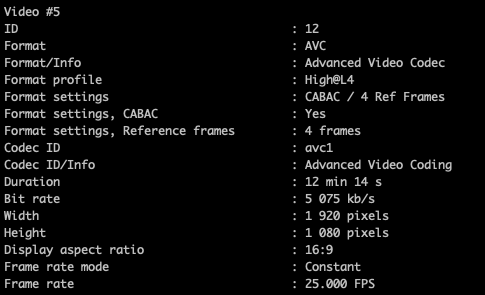

Using a simple GOP analyser script (such as this one), we can easily validate that the output has been correctly prepared and conditioned. Almost all GOPs contain 48 frames and start with an IDR frame, apart from the one before a splice point, which is shortened accordingly. Notice that the start time of the next GOP corresponds to our first cuepoint (69.92s = 1 min 9s 920ms).
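If you do not have such a script at hand, a minimal sketch could look like the one below. It is not the script referred to above. It assumes that `ffprobe` is on your PATH and that its JSON output exposes `key_frame` and `pts_time` for each frame (older builds may name the timestamp field differently). It also treats the `key_frame` flag as a stand-in for "IDR frame". The function names are hypothetical.

```python
import json
import subprocess


def probe_frames(path):
    """Ask ffprobe for the timestamp and keyframe flag of every video frame."""
    cmd = [
        "ffprobe", "-v", "error",
        "-select_streams", "v:0",
        "-show_entries", "frame=pts_time,key_frame",
        "-of", "json",
        path,
    ]
    out = subprocess.run(cmd, capture_output=True, text=True, check=True).stdout
    return json.loads(out)["frames"]


def gops(frames):
    """Group frames into GOPs and return (start_time, frame_count) pairs."""
    result = []
    for frame in frames:
        # A keyframe (or the very first frame) opens a new GOP.
        if int(frame["key_frame"]) == 1 or not result:
            result.append([float(frame["pts_time"]), 0])
        result[-1][1] += 1
    return [tuple(g) for g in result]


# Example use (requires the transcoded file to exist):
# for start, count in gops(probe_frames("output/TOS_h264_1080p_5000k.mp4")):
#     print(f"{start:10.3f}s  {count} frames")
```

In the listing, you would expect a GOP shorter than 48 frames just before each cuepoint, and a GOP starting exactly at the cuepoint time.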

Repeat this FFmpeg command for every video rung in the ladder you want to transcode to, adjusted accordingly.

ffmpeg -y -i source/TOS-original-24fps-1080p.mp4 -an -c:v libx264 -profile:v high -level:v 3.2 -b:v 3200000 -vf scale=-2:720 -r 25 -g 48 -sc_threshold 0 -force_key_frames 69.92,257.92,588.4 output/TOS_h264_720p_3200k.mp4

ffmpeg -y -i source/TOS-original-24fps-1080p.mp4 -an -c:v libx264 -profile:v main -level:v 3.1 -b:v 2300000 -vf scale=-2:480 -r 25 -g 48 -sc_threshold 0 -force_key_frames 69.92,257.92,588.4 output/TOS_h264_480p_2300k.mp4

...

Adjusting cuepoints

To avoid issues and unwarranted side effects, it is probably a good idea to ensure that the cuepoint time is compatible with the video frame rate. Otherwise FFmpeg may well choose the wrong frame. A simple formula for this is

ceiling(time * framerate) / framerate
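As a minimal sketch of that formula in Python (the function name is hypothetical): cuepoints are passed as strings and handled as exact fractions, because binary floating point could push a value that already sits on a frame boundary up to the next frame.

```python
from fractions import Fraction
from math import ceil


def snap_to_frame(time_s: str, fps: int) -> Fraction:
    """Round a cuepoint (decimal seconds, given as a string) up to a frame boundary."""
    exact = Fraction(time_s)  # exact decimal value, no floating-point rounding
    return Fraction(ceil(exact * fps), fps)


for cue in ["69.92", "257.92", "588.4", "70.01"]:
    print(cue, "->", float(snap_to_frame(cue, 25)))
```

The three cuepoints used in this playbook already fall on a 25 fps frame boundary, so they come back unchanged. A value such as 70.01 would be moved to the next frame.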

1.b. Audio renditions

Next, we need to treat the audio tracks.

ffmpeg -y -i source/TOS-original-24fps-1080p.mp4 \

-vn \

-c:a aac -ar 48000 -b:a 128000 -ac 2 \

-metadata:s:a:0 language=eng \

output/TOS_aac_128k_eng.mp4

- `-c:a aac -ar 48000 -b:a 128000 -ac 2` indicates that we want to generate an AAC stream at 128 kbps and stereo (2 channels) at a (standard) sample rate of 48 kHz. That sample rate is worth setting explicitly even if it’s the default, as it’s critical to ensure proper alignment between audio and video segments down the line.

- `-vn` tells FFmpeg to ignore the video track.

- `-metadata:s:a:0 language=eng` writes the audio language as metadata into the stream.

Note that there is no need to use the -force_key_frames flag for audio tracks (there’s no such thing as a keyframe or IDR frame in audio streams).

Language Codes

It’s a good idea to use 3-letter language codes (from the ISO 639-2 specification) instead of 2-letter codes, as some of the tools in this playbook may not correctly recognise 2-letter codes.

If you have multiple audio languages, treat them the same way. Here they come from different source files.

ffmpeg -y -i source/TOS-dubbed-fr.mp3 -vn -c:a aac -ar 48000 -b:a 128000 -ac 2 -metadata:s:a:0 language=fra output/TOS_aac_128k_fra.mp4

ffmpeg -y -i source/TOS-dubbed-de.mp3 -vn -c:a aac -ar 48000 -b:a 128000 -ac 2 -metadata:s:a:0 language=deu output/TOS_aac_128k_deu.mp4

...

2. Prepare subtitles

If you don’t have subtitles, you can simply and safely skip this step. If you do, and they come in a sidecar format (such as SRT or VTT), you will need to have them transformed to an ISOBMFF containerised format (here: MP4), usually referred to as an ISMT or CMFT file.

We’ll use the Unified Packager to perform that step, through Docker:

docker run --rm \

-e UspLicenseKey \

-v `pwd`/source/:/input -v `pwd`/output:/data \

unifiedstreaming/mp4split:latest \

-o /data/TOS_vtt_eng.ismt \

/input/tears-of-steel-en.srt --track_language=eng

- The first part of the command, from docker run to unifiedstreaming/mp4split:latest, tells Docker what image to run, how to map local folders into the container, and how to pass the environment variable that contains the Unified license key.

- `-o /data/TOS_vtt_eng.ismt` tells Unified Packager the output format (ISMT) and where to store it.

- `/input/tears-of-steel-en.srt --track_language=eng` tells Unified Packager what input file to use, and what track language to associate with it.

If you have multiple subtitles, repeat the command accordingly:

docker run --rm -e UspLicenseKey -v `pwd`/source/:/input -v `pwd`/output:/data unifiedstreaming/mp4split:latest -o /data/TOS_vtt_deu.ismt /input/tears-of-steel-de.srt --track_language=deu

...

Parameter order

The order of the parameters to the mp4split program is significant. The output file is defined first, before the inputs and their parameters.

3. Remix

In the final step, we will package the content into segments, that will be referenced in HLS and DASH manifests, using the Unified Packager. However, we need to ensure that the segments take into consideration the cuepoints, and that we have segments that start and end at the splice points.

To pass that information to the packager, the simplest way (in the author’s experience) is to use the Unified Remix solution, and generate a SMIL file that contains the appropriate instructions.

This SMIL document contains a playlist of sorts, that defines:

- The list of rendition files (for all media types)

- A list of signals that define where to splice the stream. They are defined using MPEG-DASH Event nodes with SCTE35 information, one for each cuepoint, with the presentationTime expressed in units of the EventStream timescale (90000 ticks per second here, so the cuepoint at 69.92s becomes 6292800).

<?xml version='1.0' encoding='utf-8'?>

<smil xmlns="http://www.w3.org/2001/SMIL20/Language">

<body>

<seq>

<par>

<video src="TOS_h264_1080p_5000k.mp4" />

<video src="TOS_h264_720p_3200k.mp4" />

<video src="TOS_h264_480p_2300k.mp4" />

<!-- other video renditions -->

<video src="TOS_aac_128k_eng.mp4" />

<video src="TOS_aac_128k_fra.mp4" />

<video src="TOS_aac_128k_deu.mp4" />

<!-- other audio renditions -->

<video src="TOS_vtt_eng.ismt" />

<video src="TOS_vtt_deu.ismt" />

<!-- other subtitles renditions -->

<EventStream xmlns="urn:mpeg:dash:schema:mpd:2011"

schemeIdUri="urn:scte:scte35:2013:xml" timescale="90000">

<Event presentationTime="6292800" duration="0" id="1">

<Signal xmlns="http://www.scte.org/schemas/35/2016">

<SpliceInfoSection>

<SpliceInsert spliceEventId="1"

outOfNetworkIndicator="1"

spliceImmediateFlag="1">

<Program />

<BreakDuration autoReturn="1" duration="0" />

</SpliceInsert>

</SpliceInfoSection>

</Signal>

</Event>

<Event presentationTime="23212800" duration="0" id="2">

<Signal xmlns="http://www.scte.org/schemas/35/2016">

<SpliceInfoSection>

<SpliceInsert spliceEventId="2"

outOfNetworkIndicator="1"

spliceImmediateFlag="1">

<Program />

<BreakDuration autoReturn="1" duration="0" />

</SpliceInsert>

</SpliceInfoSection>

</Signal>

</Event>

<Event presentationTime="52956000" duration="0" id="3">

<Signal xmlns="http://www.scte.org/schemas/35/2016">

<SpliceInfoSection>

<SpliceInsert spliceEventId="3"

outOfNetworkIndicator="1"

spliceImmediateFlag="1">

<Program />

<BreakDuration autoReturn="1" duration="0" />

</SpliceInsert>

</SpliceInfoSection>

</Signal>

</Event>

</EventStream>

</par>

</seq>

</body>

</smil>

However you write it (by hand or by script), store it in the output folder, alongside the MP4 renditions generated so far. The command below expects it to be named playlist.smil.
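If you go the script route, the Event nodes could be generated along these lines. This is a sketch only: the template simply mirrors the Event structure used in the SMIL document above, the function names are hypothetical, and the cuepoints are assumed to arrive as decimal seconds in string form.

```python
from fractions import Fraction

TIMESCALE = 90000  # matches timescale="90000" on the EventStream element

EVENT_TEMPLATE = """<Event presentationTime="{pt}" duration="0" id="{n}">
  <Signal xmlns="http://www.scte.org/schemas/35/2016">
    <SpliceInfoSection>
      <SpliceInsert spliceEventId="{n}" outOfNetworkIndicator="1" spliceImmediateFlag="1">
        <Program />
        <BreakDuration autoReturn="1" duration="0" />
      </SpliceInsert>
    </SpliceInfoSection>
  </Signal>
</Event>"""


def presentation_time(cue_seconds: str) -> int:
    """Convert a cuepoint in seconds to timescale ticks, refusing inexact values."""
    ticks = Fraction(cue_seconds) * TIMESCALE
    if ticks.denominator != 1:
        raise ValueError(f"{cue_seconds}s is not a whole number of ticks")
    return int(ticks)


def events(cues):
    """Render one Event node per cuepoint, numbered from 1."""
    return "\n".join(
        EVENT_TEMPLATE.format(pt=presentation_time(cue), n=index)
        for index, cue in enumerate(cues, start=1)
    )


print(events(["69.92", "257.92", "588.4"]))
```

The output is meant to be pasted (or templated) inside the EventStream element of the SMIL document.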

We can then execute the Unified Remix tool on that playlist, to have it prepare a single MP4 file that contains all the tracks.

docker run --rm -e UspLicenseKey -v `pwd`/source/:/input -v `pwd`/output:/data unifiedstreaming/unified_remix:latest -o /data/remixed.mp4 /data/playlist.smil

Data reference (dref) file

This remixed.mp4 file is a “dref” file that only contains references to the other files. It also contains a metadata track with the SCTE35 data. It’s therefore very small in comparison with the other MP4 files.

Since it doesn’t contain any actual stream data itself, it will most likely not play in your video players. To check its content, use a tool like MediaInfo or ffprobe instead.

3. Package

Now we can properly perform the packaging and generation of the manifests.

3.1. HLS with TS segments

We use the Unified Packager to generate a fragmented output (with each segment in its own .ts file). It also creates a media playlist in the M3U8 format that references them.

docker run --rm -e UspLicenseKey \

-v `pwd`/source/:/input -v `pwd`/output:/data \

unifiedstreaming/mp4split:latest \

--package_hls -o hls/TOS_h264_1080p_5000k.m3u8 \

--start_segments_with_iframe \

--fragment_duration=96/25 \

--timed_metadata --splice_media \

--base_media_file TOS_h264_1080p_5000k_ \

--iframe_index_file=hls/iframe-TOS_h264_1080p_5000k.m3u8 \

remixed.mp4 --track_type=meta \

remixed.mp4 --track_type=video --track_filter='systemBitrate>4800000&&systemBitrate<5200000'

There’s quite a bit to it:

- `--package_hls` tells it to generate HLS with TS segments.

- `-o hls/TOS_h264_1080p_5000k.m3u8` defines the name of the media playlist file.

- `--start_segments_with_iframe` ensures that segments only contain full GOPs.

- `--fragment_duration` tells it how long the segments must be. The easiest way to define it (in the author’s view, and it comes as a recommendation in the Unified documentation) is to use a fraction with the numerator expressing the number of frames and the denominator the frame rate. The numerator should be a multiple of the GOP size, so we choose 96 to produce 2-GOP segments of 3.84s. Note that explicitly spliced segments will be shorter, but will still contain entire GOPs.

- `--timed_metadata` and `--splice_media` ensure that the SCTE35 data containing splice points is taken into consideration to split the segments at the cuepoints.

- `--base_media_file` defines the prefix of the segment files.

- `--iframe_index_file` tells the packager to also create HLS I-frame playlists for players that support fast seeking.

Finally we provide Unified Packager with instructions as to where to find the relevant stream in the remixed file:

- `remixed.mp4 --track_type=meta` tells it that the timed metadata is in a track of its own.

- `remixed.mp4 --track_type=video --track_filter='systemBitrate>4800000&&systemBitrate<5200000'` tells it that the media stream is a video track, with a bitrate between 4.8 Mbps and 5.2 Mbps.

Why this expression?

The order of the tracks in the output file from Remix will not necessarily be the same as in the input SMIL file. We therefore can’t use track order to point to the media track. Instead we need to use specs about the track. For video in an ABR situation, the bitrate is usually the best indicator.

However, when we transcoded the source file with FFmpeg, and defined a bitrate of 5 Mbps, this was only a target. The effective bitrate will not exactly be that number. How far from it will depend on many factors, which it is beyond the scope of this document to describe.

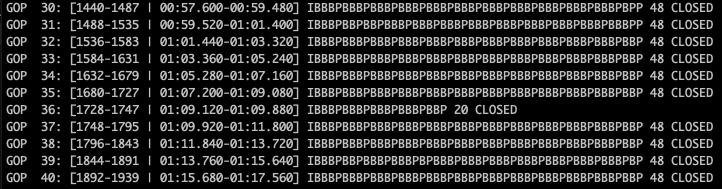

If you want to have a more precise number, you could query the FFmpeg output file with a tool like MediaInfo or FFprobe:

mediainfo output/remixed.mp4

Following that, you could then ask Unified Packager for an exact match:

docker run ... \

remixed.mp4 --track_type=video --track_filter='systemBitrate==5075000'

However, the way Unified Packager determines that bitrate may be slightly different, and you may end up with errors telling you that it could not find a track.

Therefore, in the author’s experience, providing Unified Packager with a sensible range of bitrates around the encoder’s target bitrate will usually do the trick.
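When scripting the packaging of a whole ladder, the filter expression can be derived from the encoder’s target bitrate. The sketch below is an illustration: the helper name is hypothetical, and the 4% tolerance is simply the one implied by the 4.8 to 5.2 Mbps range used above, not a value recommended by Unified Streaming.

```python
def bitrate_filter(target_bps: int, tolerance_pct: int = 4) -> str:
    """Build a --track_filter expression covering a range around the target bitrate."""
    low = target_bps * (100 - tolerance_pct) // 100
    high = target_bps * (100 + tolerance_pct) // 100
    return f"systemBitrate>{low}&&systemBitrate<{high}"


for target in (5000000, 3200000, 2300000):
    print(f"--track_filter='{bitrate_filter(target)}'")
```

Whatever tolerance you choose, make sure that the ranges of neighbouring rungs in the ladder do not overlap, otherwise the filter could match more than one track.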

We repeat this with all tracks. It’s easier to specify the subtitle and audio tracks, thanks to the language tag (and the fact that there’s only one rendition per language)

# audio

docker run --rm -e UspLicenseKey \

-v `pwd`/source/:/input -v `pwd`/output:/data \

unifiedstreaming/mp4split:latest \

--package_hls -o hls/TOS_aac_128k_eng.m3u8 \

--start_segments_with_iframe \

--fragment_duration=96/25 \

--timed_metadata --splice_media \

--base_media_file TOS_aac_128k_eng_ \

remixed.mp4 --track_type=meta \

remixed.mp4 --track_type=audio --track_filter='systemLanguage=="eng"'

# subtitle

docker run --rm -e UspLicenseKey \

-v `pwd`/source/:/input -v `pwd`/output:/data \

unifiedstreaming/mp4split:latest \

--package_hls -o hls/TOS_sub_eng.m3u8 \

--start_segments_with_iframe \

--fragment_duration=9600/2500 \

--timed_metadata --splice_media \

--base_media_file TOS_sub_eng_ \

--iframe_index_file=hls/iframe-sub_eng.m3u8 \

remixed.mp4 --track_type=meta \

remixed.mp4 --track_type=text --track_filter='systemLanguage=="eng"'

Finally, we need to produce the multi-variant playlist, which links to all those individual media playlists. One more packager command to do so, which should be self-explanatory:

docker run --rm -e UspLicenseKey \

-v `pwd`/source/:/input -v `pwd`/output:/data \

unifiedstreaming/mp4split:latest \

--package_hls -o hls/main.m3u8 \

hls/TOS_h264_1080p_5000k.m3u8 \

hls/TOS_h264_720p_3200k.m3u8 \

hls/TOS_h264_480p_2300k.m3u8 \

hls/TOS_aac_128k_eng.m3u8 \

hls/TOS_aac_128k_fra.m3u8 \

hls/TOS_aac_128k_deu.m3u8 \

hls/TOS_sub_eng.m3u8 \

hls/TOS_sub_deu.m3u8

The job is done (for HLS at least). Have a look at the output files if you need convincing:

#EXTM3U

#EXT-X-VERSION:4

## Created with Unified Streaming Platform (version=1.12.3-28597)

# AUDIO groups

#EXT-X-MEDIA:TYPE=AUDIO,GROUP-ID="audio",LANGUAGE="en",NAME="English",DEFAULT=YES,AUTOSELECT=YES,CHANNELS="2",URI="TOS_aac_128k_eng.m3u8"

#EXT-X-MEDIA:TYPE=AUDIO,GROUP-ID="audio",LANGUAGE="fr",NAME="French",AUTOSELECT=YES,CHANNELS="2",URI="TOS_aac_128k_fra.m3u8"

#EXT-X-MEDIA:TYPE=AUDIO,GROUP-ID="audio",LANGUAGE="de",NAME="German",AUTOSELECT=YES,CHANNELS="2",URI="TOS_aac_128k_deu.m3u8"

# SUBTITLES groups

#EXT-X-MEDIA:TYPE=SUBTITLES,GROUP-ID="textstream",LANGUAGE="en",NAME="English",DEFAULT=YES,AUTOSELECT=YES,URI="TOS_sub_eng.m3u8"

#EXT-X-MEDIA:TYPE=SUBTITLES,GROUP-ID="textstream",LANGUAGE="de",NAME="German",AUTOSELECT=YES,URI="TOS_sub_deu.m3u8"

# variants

#EXT-X-STREAM-INF:BANDWIDTH=46546000,AVERAGE-BANDWIDTH=5349000,CODECS="avc1.640028,mp4a.40.2",RESOLUTION=1920x1080,FRAME-RATE=25,VIDEO-RANGE=SDR,AUDIO="audio",SUBTITLES="textstream",CLOSED-CAPTIONS=NONE

TOS_full_v2_h264_1080p_5000k.m3u8

#EXT-X-STREAM-INF:BANDWIDTH=26580000,AVERAGE-BANDWIDTH=3484000,CODECS="avc1.640020,mp4a.40.2",RESOLUTION=1280x720,FRAME-RATE=25,VIDEO-RANGE=SDR,AUDIO="audio",SUBTITLES="textstream",CLOSED-CAPTIONS=NONE

TOS_full_v2_h264_720p_3200k.m3u8

#EXT-X-STREAM-INF:BANDWIDTH=15783000,AVERAGE-BANDWIDTH=2547000,CODECS="avc1.4D401F,mp4a.40.2",RESOLUTION=854x480,FRAME-RATE=25,VIDEO-RANGE=SDR,AUDIO="audio",SUBTITLES="textstream",CLOSED-CAPTIONS=NONE

TOS_full_v2_h264_480p_2300k.m3u8

Pretty standard.

It gets more interesting inside the media playlists:

#EXTM3U

#EXT-X-VERSION:3

## Created with Unified Streaming Platform (version=1.12.3-28597)

#EXT-X-PLAYLIST-TYPE:VOD

#EXT-X-MEDIA-SEQUENCE:0

#EXT-X-INDEPENDENT-SEGMENTS

#EXT-X-TARGETDURATION:4

#USP-X-MEDIA:BANDWIDTH=46406000,AVERAGE-BANDWIDTH=5215000,TYPE=VIDEO,GROUP-ID="video-avc1-5075",LANGUAGE="en",NAME="English",AUTOSELECT=YES,CODECS="avc1.640028",RESOLUTION=1920x1080,FRAME-RATE=25,VIDEO-RANGE=SDR

#EXT-X-PROGRAM-DATE-TIME:1970-01-01T00:00:00Z

#EXTINF:3.84, no desc

TOS_full_v2_h264_1080p_5000k_0.ts

#EXTINF:3.84, no desc

# ... skipped for legibility

#EXTINF:3.84, no desc

TOS_full_v2_h264_1080p_5000k_17.ts

#EXTINF:0.8, no desc

TOS_full_v2_h264_1080p_5000k_18.ts

## splice_insert(auto_return)

#EXT-X-DATERANGE:ID="1-69",START-DATE="1970-01-01T00:01:09.920000Z",PLANNED-DURATION=0,SCTE35-OUT=0xFC302000000000000000FFF00F05000000017FFFFE000000000000000000007A3D9BBD

#EXT-X-CUE-OUT:0

#EXT-X-CUE-IN

#EXT-X-PROGRAM-DATE-TIME:1970-01-01T00:01:09.920000Z

#EXTINF:3.84, no desc

TOS_full_v2_h264_1080p_5000k_19.ts

#EXTINF:3.84, no desc

TOS_full_v2_h264_1080p_5000k_20.ts

You notice a few things:

- Most segments are 3.84s, as instructed.

- Before the first splice point, a 0.8s segment is inserted.

- Unified Packager added HLS tags that reflect the fact that a 0-duration splice has been performed.

SCTE35 support

This information is, at the time of writing, not used by broadpeak.io to determine where to insert ads in VOD. But it’s certainly useful for troubleshooting.

You’ll notice the exact same splice points in the other media playlists, including for subtitles.
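To check a media playlist without reading it line by line, you can add up the EXTINF durations that precede the first EXT-X-DATERANGE tag. The sketch below is an illustration with a hypothetical function name. It runs on a synthetic playlist that has the same shape as the excerpt above, not on the real file.

```python
from decimal import Decimal


def content_time_before_first_splice(playlist_text: str) -> Decimal:
    """Sum the segment durations listed before the first EXT-X-DATERANGE tag."""
    elapsed = Decimal(0)
    for line in playlist_text.splitlines():
        line = line.strip()
        if line.startswith("#EXT-X-DATERANGE"):
            return elapsed
        if line.startswith("#EXTINF:"):
            # "#EXTINF:3.84, no desc" -> "3.84"
            elapsed += Decimal(line[len("#EXTINF:"):].split(",")[0])
    raise ValueError("no EXT-X-DATERANGE tag found")


# Synthetic playlist: 18 full segments, one short segment, then the splice.
sample = "\n".join(
    ["#EXTM3U"]
    + ["#EXTINF:3.84, no desc\nsegment.ts"] * 18
    + ["#EXTINF:0.8, no desc\nsegment.ts"]
    + ['#EXT-X-DATERANGE:ID="1-69"']
    + ["#EXTINF:3.84, no desc\nsegment.ts"]
)

print(content_time_before_first_splice(sample))
```

Run against each media playlist, the result should equal the first cuepoint expressed in seconds.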

3.2 DASH with fMP4

To package into DASH, Unified Packager requires each track to be contained in a CMAF file (another variation on ISOBMFF). So, we need to go through all tracks in the remixed file, and create those files.

docker run --rm -e UspLicenseKey \

-v `pwd`/source/:/input -v `pwd`/output:/data \

unifiedstreaming/mp4split:latest \

-o TOS_h264_1080p_5000k.cmfv \

--fragment_duration=96/25 \

--timed_metadata --splice_media \

remixed.mp4 --track_type=meta \

remixed.mp4 --track_type=video --track_filter='systemBitrate>4800000&&systemBitrate<5200000'

This looks similar to the way we packaged HLS into individual media playlists before. Just note the “.cmfv” extension for the output file.

Treat all the other tracks in the same way:

# audio

docker run --rm -e UspLicenseKey \

-v `pwd`/source/:/input -v `pwd`/output:/data \

unifiedstreaming/mp4split:latest \

-o TOS_aac_128k_eng.cmfa \

--timed_metadata --splice_media \

--fragment_duration=96/25 \

remixed.mp4 --track_type=meta \

remixed.mp4 --track_type=audio --track_filter='systemLanguage=="eng"'

# subtitle

docker run --rm -e UspLicenseKey \

-v `pwd`/source/:/input -v `pwd`/output:/data \

unifiedstreaming/mp4split:latest \

-o TOS_sub_eng.cmft \

--fragment_duration=96/25 \

--timed_metadata --splice_media \

remixed.mp4 --track_type=meta \

remixed.mp4 --track_type=text --track_filter='systemLanguage=="eng"'

Now it remains to generate the DASH manifest.

docker run --rm -e UspLicenseKey \

-v `pwd`/source/:/input -v `pwd`/output:/data \

unifiedstreaming/mp4split:latest \

--package_mpd -o dash/main.mpd \

--mpd.profile=urn:mpeg:dash:profile:isoff-live:2011 \

--timed_metadata \

TOS_h264_1080p_5000k.cmfv \

TOS_h264_720p_3200k.cmfv \

TOS_h264_480p_2300k.cmfv \

TOS_aac_128k_eng.cmfa \

TOS_aac_128k_fra.cmfa \

TOS_aac_128k_deu.cmfa \

TOS_sub_eng.cmft \

TOS_sub_deu.cmft

Although it’s again very similar to the way we generated the multi-variant playlist for HLS, it warrants a few explanations:

- `--package_mpd` tells the packager that we want to generate MPEG-DASH (an MPD, or Media Presentation Description).

- `--mpd.profile=urn:mpeg:dash:profile:isoff-live:2011` indicates that we want the output to be fragmented (that is: made of individual files for each segment). This is a necessity to use a server-side ad insertion technology like broadpeak.io.

Again, let’s look at the output to validate our work:

<?xml version="1.0" encoding="utf-8"?>

<!-- Created with Unified Streaming Platform (version=1.12.3-28597) -->

<MPD

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xmlns="urn:mpeg:dash:schema:mpd:2011"

xsi:schemaLocation="urn:mpeg:dash:schema:mpd:2011 http://standards.iso.org/ittf/PubliclyAvailableStandards/MPEG-DASH_schema_files/DASH-MPD.xsd"

type="static"

mediaPresentationDuration="PT12M14S"

maxSegmentDuration="PT5S"

minBufferTime="PT10S"

profiles="urn:mpeg:dash:profile:isoff-live:2011,urn:com:dashif:dash264">

<Period

id="1"

duration="PT12M14S">

<AdaptationSet

id="1"

group="1"

contentType="audio"

lang="de"

segmentAlignment="true"

audioSamplingRate="24000"

mimeType="audio/mp4"

codecs="mp4a.40.2"

startWithSAP="1">

<AudioChannelConfiguration

schemeIdUri="urn:mpeg:dash:23003:3:audio_channel_configuration:2011"

value="2" />

<Role schemeIdUri="urn:mpeg:dash:role:2011" value="main" />

<SegmentTemplate

timescale="48000"

initialization="main_timed-$RepresentationID$.dash"

media="main_timed-$RepresentationID$-$Time$.dash">

<SegmentTimeline>

<S t="0" d="184320" r="17" />

<S d="38912" />

<S d="145408" />

<S d="184320" r="46" />

<S d="215040" />

<S d="153600" />

<S d="184320" r="78" />

<S d="121728" />

</SegmentTimeline>

</SegmentTemplate>

<Representation

id="audio_deu=122000"

bandwidth="122000">

</Representation>

</AdaptationSet>

<!-- ... remainder removed for legibility -->

</Period>

</MPD>

The information we’re after to prove that conditioning has taken place is to be found in the SegmentTimeline. It indicates a first segment of 3.84 seconds (184320 / 48000) that is repeated 17 more times (r="17"), so 18 segments in total, followed by a segment of about 0.81 second (38912 / 48000). This matches what we were seeing in HLS: 18 segments of 3.84s plus 0.81s gives about 69.93s, which is our first splice point at 69.92s. The small difference comes from the fact that audio can only be split on audio frame boundaries.
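The same check can be scripted. The sketch below is an illustration with a hypothetical function name: it expands the S elements of a SegmentTimeline, taking into account that r counts additional repeats, and adds up the durations. It works on a fragment copied from the manifest above and ignores the special negative values that r may take in other manifests.

```python
import xml.etree.ElementTree as ET
from fractions import Fraction


def segment_durations(timeline_xml: str, timescale: int):
    """Expand the S elements of a SegmentTimeline into one duration per segment."""
    durations = []
    for element in ET.fromstring(timeline_xml).iter():
        # Strip a possible "{namespace}" prefix before comparing the tag name.
        if element.tag.rsplit("}", 1)[-1] != "S":
            continue
        repeats = int(element.get("r", "0"))  # r = number of extra repetitions
        duration = Fraction(int(element.get("d")), timescale)
        durations.extend([duration] * (repeats + 1))
    return durations


fragment = """<SegmentTimeline>
  <S t="0" d="184320" r="17" />
  <S d="38912" />
  <S d="145408" />
</SegmentTimeline>"""

durations = segment_durations(fragment, 48000)
print("segments:", len(durations))
print("end of short segment:", float(sum(durations[:19])), "s")
print("end of next segment:", float(sum(durations)), "s")
```

The segment that follows the splice is shortened as well, so that the timeline returns to the regular 3.84s grid afterwards.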

3.3 Push to the Origin

Before the content can be used by broadpeak.io, it needs to be stored on an origin. Any static origin will do. You could for example use AWS S3 or Google Cloud Storage.

Upload to it the complete "hls" and "dash" folders created by Unified Packager.

4. Simulate an ad server

For ad insertion in VOD, the broadpeak.io solution requires the ad server to return a VMAP payload, which defines the VAST tag for each relevant insertion point (pre-roll, mid-rolls and post-rolls). The timing in that VMAP payload must match the cuepoints / splice points, for ad insertion to be performed accurately.

To simulate an ad server for the purpose of this playbook, we will create a static VMAP payload and store it into a “vast.xml” file:

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<vmap:VMAP xmlns:vmap="http://www.iab.net/vmap-1.0" version="1.0">

<vmap:AdBreak timeOffset="start" breakType="linear" breakId="pre">

<vmap:AdSource id="pre">

<vmap:AdTagURI templateType="vast3">

<![CDATA[https://bpkiovast.s3.eu-west-1.amazonaws.com/vastbpkio]]>

</vmap:AdTagURI>

</vmap:AdSource>

</vmap:AdBreak>

<vmap:AdBreak timeOffset="00:01:09.920" breakType="linear" breakId="mid1">

<vmap:AdSource id="mid1">

<vmap:AdTagURI templateType="vast3">

<![CDATA[https://bpkiovast.s3.eu-west-1.amazonaws.com/vastbpkio]]>

</vmap:AdTagURI>

</vmap:AdSource>

</vmap:AdBreak>

<vmap:AdBreak timeOffset="00:04:17.920" breakType="linear" breakId="mid2">

<vmap:AdSource id="mid2">

<vmap:AdTagURI templateType="vast3">

<![CDATA[https://bpkiovast.s3.eu-west-1.amazonaws.com/vastbpkio]]>

</vmap:AdTagURI>

</vmap:AdSource>

</vmap:AdBreak>

<vmap:AdBreak timeOffset="00:09:48.400" breakType="linear" breakId="mid3">

<vmap:AdSource id="mid3">

<vmap:AdTagURI templateType="vast3">

<![CDATA[https://bpkiovast.s3.eu-west-1.amazonaws.com/vastbpkio]]>

</vmap:AdTagURI>

</vmap:AdSource>

</vmap:AdBreak>

</vmap:VMAP>

It stipulates a pre-roll ad, and a mid-roll ad for each of our cuepoints.

For simplicity, we’ll upload it to the same origin server as the streams.
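Since the timing in the VMAP payload must match the cuepoints exactly, it can be safer to generate the file from the same cuepoint list that was used for transcoding. A minimal sketch, with hypothetical function names, assuming a single ad tag for every break (as in the static example above) and cuepoints given as decimal seconds with at most millisecond precision:

```python
from decimal import Decimal

# Assumption: every break uses the same ad tag, as in the static example.
AD_TAG = "https://bpkiovast.s3.eu-west-1.amazonaws.com/vastbpkio"

BREAK_TEMPLATE = """  <vmap:AdBreak timeOffset="{offset}" breakType="linear" breakId="{break_id}">
    <vmap:AdSource id="{break_id}">
      <vmap:AdTagURI templateType="vast3">
        <![CDATA[{tag}]]>
      </vmap:AdTagURI>
    </vmap:AdSource>
  </vmap:AdBreak>"""


def time_offset(cue_seconds: str) -> str:
    """Format a cuepoint in seconds as HH:MM:SS.mmm."""
    total_ms = int(Decimal(cue_seconds) * 1000)
    hours, rest = divmod(total_ms, 3_600_000)
    minutes, rest = divmod(rest, 60_000)
    seconds, millis = divmod(rest, 1000)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}.{millis:03d}"


def build_vmap(cues, tag=AD_TAG) -> str:
    """Render a VMAP document with a pre-roll and one mid-roll per cuepoint."""
    breaks = [BREAK_TEMPLATE.format(offset="start", break_id="pre", tag=tag)]
    for index, cue in enumerate(cues, start=1):
        breaks.append(
            BREAK_TEMPLATE.format(
                offset=time_offset(cue), break_id=f"mid{index}", tag=tag
            )
        )
    header = (
        '<?xml version="1.0" encoding="UTF-8" standalone="no"?>\n'
        '<vmap:VMAP xmlns:vmap="http://www.iab.net/vmap-1.0" version="1.0">\n'
    )
    return header + "\n".join(breaks) + "\n</vmap:VMAP>\n"


print(build_vmap(["69.92", "257.92", "588.4"]))
```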

AdProxy for VMAP generation

If your ad server does not support the generation of VMAP, or is not able to manage different cuepoints for different assets, you could consider the broadpeak.io AdProxy (VMAP Generator) feature, which allows you to dynamically work with a standard VAST-compliant ad server. See Ad Proxy for details.

5. Configuration in broadpeak.io

The hard work has been done. It just remains to configure the broadpeak.io solution.

5.1. Asset Catalog Source

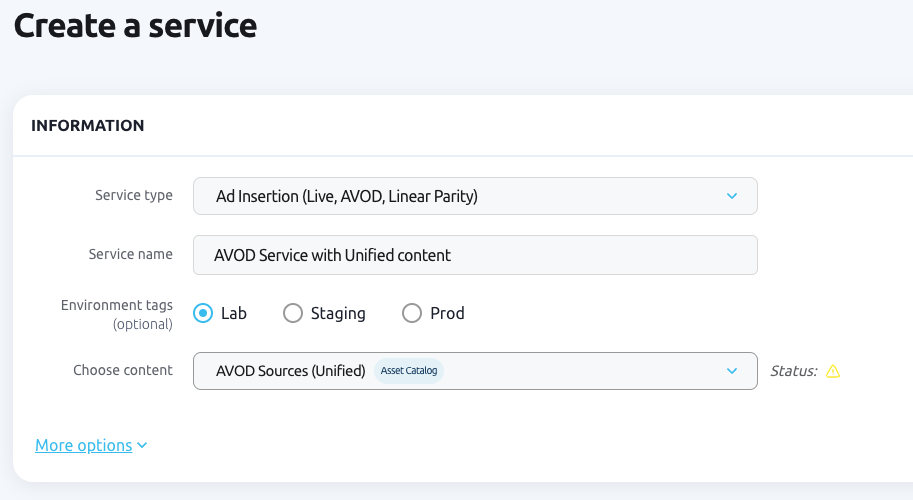

The broadpeak.io Dynamic Ad Insertion solution for AVOD requires an Asset Catalog source. We’ll point it to the root folder, which will allow multiple assets stored side-by-side to be supported through the same service, regardless of format

The warning Status sign may appear, complaining about inconsistent HLS versions between the multi-variant and media playlists. You can safely ignore it.

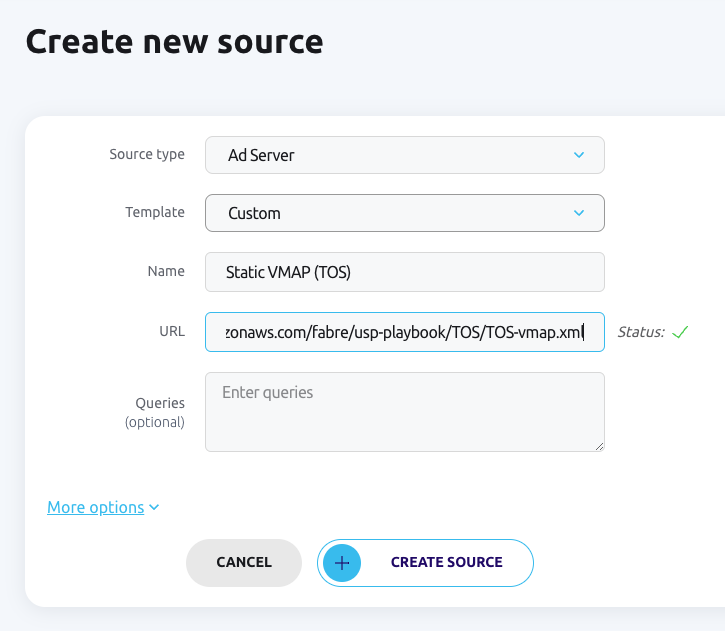

5.2 Ad Server

Since we’re using a static VMAP file to simulate an Ad Server, we can just use the URL to the file on the origin.

5.3 DAI Service

It’s now a simple question of creating a Service that links these resources together.

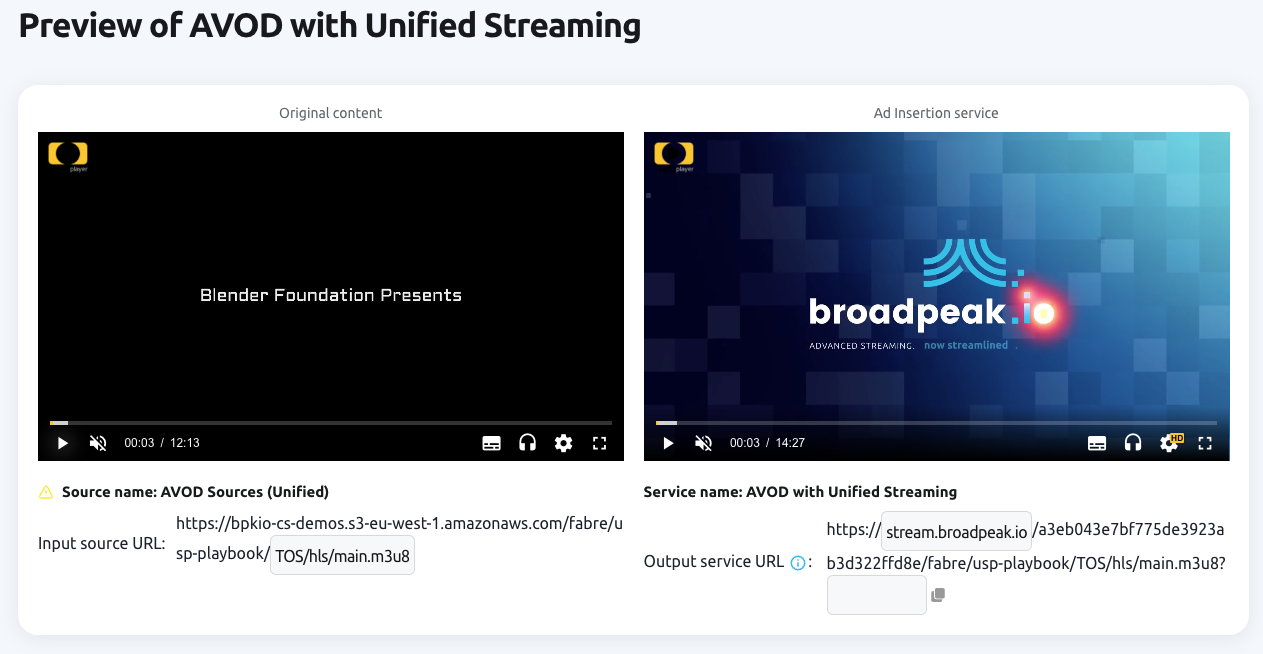

6. Play

We can now head to the preview page, and play the source and the service side by side.

Ad transcoding time

Note that the first time, ads will likely not be inserted. They first need to be transcoded by broadpeak.io before they can be inserted. Try again after a short while, and they should start showing.

Straight away, you should see a pre-roll, as defined in the VMAP payload. If you scrub the player with the service URL, you should also see mid-rolls at roughly 01:43, 05:25 and 11:28. Those times are what we expect given the cuepoint times of the original asset, and the length of the ad referred to by the VAST tag in the VMAP payload, which is 33.320s:

- Mid-roll 1 at 01:43.240 = cuepoint of 01:09.920 + 1x 33.320s (pre-roll)

- Mid-roll 2 at 05:24.560 = cuepoint of 04:17.920 + 2x 33.320s (pre-roll and mid-roll 1)

- Mid-roll 3 at 11:28.360 = cuepoint of 09:48.400 + 3x 33.320s (pre-roll and mid-rolls 1 and 2)
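The same arithmetic can be scripted for other assets or ad durations. The sketch below is an illustration with hypothetical function names. It assumes that every break contains exactly one ad of the same duration, as is the case with the static VMAP file used here.

```python
from decimal import Decimal

AD_DURATION = Decimal("33.320")  # length of the ad behind the VAST tag
CUEPOINTS = [Decimal("69.92"), Decimal("257.92"), Decimal("588.4")]


def stitched_positions(cuepoints, ad_duration, pre_roll=True):
    """Position of each mid-roll in the stitched stream, in seconds."""
    positions = []
    for index, cue in enumerate(cuepoints):
        # Ads already played before this break: earlier mid-rolls plus the pre-roll.
        ads_before = index + (1 if pre_roll else 0)
        positions.append(cue + ads_before * ad_duration)
    return positions


def as_clock(seconds: Decimal) -> str:
    """Format seconds as MM:SS.mmm."""
    total_ms = int(seconds * 1000)
    minutes, rest = divmod(total_ms, 60_000)
    secs, millis = divmod(rest, 1000)
    return f"{minutes:02d}:{secs:02d}.{millis:03d}"


for number, position in enumerate(stitched_positions(CUEPOINTS, AD_DURATION), start=1):
    print(f"Mid-roll {number} at {as_clock(position)}")
```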

To test the streams in your own players, you need to work out the service stream URLs, which are simply:

- the stream base URL (as can be copied from the Web app, or retrieved from the APIs)

- to which you append the relative path to the packaged manifest, relative to the base URL of your Asset Catalog

So, in this example:

- “https://stream.broadpeak.io/a3eb043e7bf775de3923ab3d322ffd8e/fabre/usp-playbook/” + “TOS/hls/main.m3u8” for HLS

- “https://stream.broadpeak.io/a3eb043e7bf775de3923ab3d322ffd8e/fabre/usp-playbook/” + “TOS/dash/main.mpd” for DASH

Use a CDN distribution

After your initial tests, you will need to create a CDN distribution to serve your content, in line with the standard broadpeak.io requirements. It is beyond the scope of this playbook.
